I Tried the 48GB RTX 8000 for Local LLMs – Just to See If It Could Beat the 3090s

For enthusiasts like us, cobbling together systems for local Large Language Model inference is a continuous quest for that perfect balance of VRAM, performance, and cost. One such card that frequently enters the discussion for its impressive 48GB of GDDR6 VRAM is the Nvidia Quadro RTX 8000, especially given its availability on the used market for around $2250.

It’s an older Turing-architecture professional card, but that VRAM figure is tantalizing for running today’s increasingly large models. I decided to get my hands on one and see exactly what this aging behemoth can deliver for local LLM tasks, particularly with popular quantized models.

A Look at the Hardware and Its Quirks

The Quadro RTX 8000 is built on the TU102 die, similar to the GeForce RTX 2080 Ti, but with all 4608 CUDA cores enabled and, crucially, that 48GB of VRAM on a 384-bit bus, delivering 672 GB/s of memory bandwidth. Its dual-slot blower design is a practical advantage, especially if you’re considering multi-GPU configurations, as it exhausts heat directly out of the case. Power consumption is also relatively modest for its VRAM capacity, with a TDP of 260W.
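The 672 GB/s figure follows directly from the bus width and the memory's per-pin data rate. The sketch below assumes 14 Gbps GDDR6 on the RTX 8000 and 19.5 Gbps GDDR6X on the RTX 3090, the card this article later compares against. `peak_bandwidth_gb_s` is a hypothetical helper.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Every pin of the memory bus moves `data_rate_gbps` gigabits per second,
    so total bits/s = bus width * per-pin rate; dividing by 8 gives bytes/s.
    """
    return bus_width_bits * data_rate_gbps / 8


# Assumed per-pin data rates: 14 Gbps GDDR6 (RTX 8000), 19.5 Gbps GDDR6X (RTX 3090).
rtx_8000 = peak_bandwidth_gb_s(384, 14.0)
rtx_3090 = peak_bandwidth_gb_s(384, 19.5)

print(f"RTX 8000: {rtx_8000:.0f} GB/s")
print(f"RTX 3090: {rtx_3090:.0f} GB/s")
```

Both cards use a 384-bit bus, so the entire bandwidth gap comes from the faster GDDR6X memory on the 3090.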

However, the Turing architecture comes with certain limitations compared to newer Ampere or Ada Lovelace cards. It has no native BF16 support, so operations that expect BF16 typically have to run in FP16 (or FP32) instead. Support for some of the latest CUDA kernels and optimizations is also lacking or takes more effort to enable. The standard FlashAttention 2 library, for example, targets Ampere and newer. During my testing, I was unable to run models quantized in the newer ExLlamaV3 (EXL3) format, because its kernels require an Ampere (RTX 30-series) or newer GPU.

This is a critical point for anyone hoping to use the very latest quantization techniques to fit the largest possible model into a given amount of VRAM.
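Whether a given kernel or data type is available comes down largely to the GPU's CUDA compute capability. Turing cards such as the RTX 8000 report 7.5, while the RTX 3090 (Ampere) reports 8.6. The sketch below gates features on that number. The threshold table is a simplified assumption: BF16 tensor cores and the standard FlashAttention 2 library both need 8.0 or newer, and the same requirement is assumed for ExLlamaV3. `supported_features` is a hypothetical helper. On a real machine, PyTorch's `torch.cuda.get_device_capability()` returns the same `(major, minor)` tuple.

```python
# Simplified, assumed table: minimum CUDA compute capability per feature.
MIN_CAPABILITY = {
    "bf16_tensor_cores": (8, 0),
    "flash_attention_2": (8, 0),
    "exllamav3_kernels": (8, 0),  # assumption: Ampere-or-newer requirement
}


def supported_features(capability: tuple[int, int]) -> dict[str, bool]:
    """Map each feature to whether a GPU with this (major, minor) capability meets its minimum."""
    # Tuples compare element by element, so (7, 5) < (8, 0) <= (8, 6).
    return {name: capability >= minimum for name, minimum in MIN_CAPABILITY.items()}


turing = supported_features((7, 5))  # Quadro RTX 8000
ampere = supported_features((8, 6))  # GeForce RTX 3090
print("RTX 8000:", turing)
print("RTX 3090:", ampere)
```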

Testing Methodology

My goal was to assess real-world inference performance. I focused on two popular LLM backends: Exllama (ExLlamaV2) with 5.0 bits-per-weight (bpw) quantization, and llama.cpp with Q4_K_M GGUF quants, using OpenWebUI as the front-end.

The primary test models were Meta's Llama 3.3 70B and Qwen's Qwen3 30B A3B, a mixture-of-experts (MoE) model. I measured prompt processing speed and token generation speed, both in tokens per second, because these are the key metrics for interactive LLM use.
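Both metrics are simple rates, and converting them back into waiting time shows what they mean in practice. Prompt processing determines how long you wait for the first token, and generation speed determines how long the reply takes to stream out. The sketch below uses hypothetical helper names, and the 500-token reply length in the example is an arbitrary assumption.

```python
def tokens_per_second(tokens: int, seconds: float) -> float:
    """Throughput as reported by the benchmarks: tokens divided by elapsed time."""
    return tokens / seconds


def response_seconds(prompt_tokens: int, prompt_tps: float,
                     reply_tokens: int, generation_tps: float) -> float:
    """Wall-clock time for one reply: prefill time plus decode time."""
    prefill = prompt_tokens / prompt_tps    # the wait before the first token appears
    decode = reply_tokens / generation_tps  # time to stream out the reply
    return prefill + decode


# Example: Llama 3.3 70B on llama.cpp with an 8077-token prompt (rates from this article),
# followed by a hypothetical 500-token reply.
total = response_seconds(8077, 231.53, 500, 7.59)
print(f"about {total:.0f} s until the reply is complete")
```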

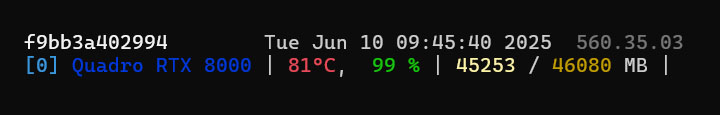

The test system ran Ubuntu 22.04 LTS with Nvidia driver version 560.35.03.

Exllama 5.0bpw Performance

Starting with Exllama and its 5.0bpw quantizations, the Llama 3.3 70B model generated 12.81 tokens per second with a short context. Prompt processing ran at only 36.18 tokens per second, most likely because a short prompt gives the GPU too little work to reach full speed. As context length increased, generation speed predictably dropped. At an 8K context, prompt processing climbed to an impressive 439.79 t/s, but generation fell to 5.09 t/s. At a 16K context, prompt processing was still strong at 387.13 t/s, with generation at 2.65 t/s.

Finally, at a demanding 25K context, the RTX 8000 managed 324.46 t/s for prompt processing and 1.8 t/s for generation. These numbers, while not groundbreaking, demonstrate the card’s ability to handle very long contexts, albeit with a trade-off in generation speed.
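The key/value (KV) cache is part of the reason generation slows as context grows. Every new token has to attend over the cached keys and values of all previous tokens, so it must read them in addition to the model weights. The sketch below sizes that cache. It assumes the published Llama 3 70B geometry (80 layers, 8 key/value heads via grouped-query attention, head dimension 128) and ignores the per-block scale overhead of quantized caches. It does not describe how Exllama actually lays out its cache. Both Exllama and llama.cpp can also store the cache at reduced precision. `kv_cache_bytes` is a hypothetical helper.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int, head_dim: int,
                   bytes_per_element: float) -> float:
    """KV cache size: one key and one value vector per layer, per KV head, per token."""
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_element
    return tokens * per_token


# Assumed Llama 3 70B-class geometry (shared by Llama 3.3 70B).
LLAMA_70B = dict(layers=80, kv_heads=8, head_dim=128)

for ctx in (8_192, 16_384, 25_000):
    fp16 = kv_cache_bytes(ctx, **LLAMA_70B, bytes_per_element=2)  # 16-bit cache
    q8 = kv_cache_bytes(ctx, **LLAMA_70B, bytes_per_element=1)    # ~8-bit cache, scales ignored
    print(f"{ctx:>6} tokens: {fp16 / 1e9:.1f} GB at FP16, {q8 / 1e9:.1f} GB at 8-bit")
```

At FP16 this works out to 320 KiB per token, so a 25K-token context adds roughly 8 GB on top of the weights. That is why long contexts eat into even a 48GB budget.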

| Model | Context Length | Prompt Processing (tokens/sec) | Token Generation (tokens/sec) |
|---|---|---|---|
| Llama 3.3 70B | Short | 36.18 | 12.81 |
| Llama 3.3 70B | 8K | 439.79 | 5.09 |
| Llama 3.3 70B | 16K | 387.13 | 2.65 |
| Llama 3.3 70B | 25K | 324.46 | 1.80 |
| Qwen3 30B A3B | Short | 13.29 | 43.00 |
| Qwen3 30B A3B | 8K | 913.46 | 12.35 |
| Qwen3 30B A3B | 16K | 963.30 | 7.42 |

For the smaller Qwen3 30B A3B model using Exllama 5.0bpw, the results were quite different. With a short context, prompt processing was a modest 13.29 t/s, but token generation was a brisk 43.0 t/s. With an 8K context (8754 tokens in my test), prompt processing soared to 913.46 t/s while generation settled at 12.35 t/s. At a 16K context (16159 tokens), prompt processing reached 963.3 t/s, with generation at 7.42 t/s. The high short-context generation speed is what you would expect from a mixture-of-experts model. Although it holds about 30 billion parameters, only about 3 billion are active for each generated token, so far less data has to be read from VRAM per token than with a dense 70B model.
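A bandwidth-bound ceiling gives a rough sense of the dense-versus-MoE difference. Producing one token means reading every active weight from VRAM once, so tokens per second cannot exceed bandwidth divided by the bytes read per token. The sketch below assumes about 70 billion weights for Llama 3.3 70B and about 3.3 billion active weights per token for Qwen3 30B A3B. It also assumes a flat 5.0 bits per weight for both models, although real quantized files mix precisions. `decode_ceiling_tps` is a hypothetical helper.

```python
def decode_ceiling_tps(active_params: float, bits_per_weight: float,
                       bandwidth_gb_s: float) -> float:
    """Upper bound on generation speed if reading weights were the only cost.

    Ignores KV-cache reads, compute limits, and kernel overheads.
    """
    bytes_per_token = active_params * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


RTX_8000_GB_S = 672

dense_70b = decode_ceiling_tps(70e9, 5.0, RTX_8000_GB_S)  # every weight is used for each token
moe_a3b = decode_ceiling_tps(3.3e9, 5.0, RTX_8000_GB_S)   # only the active experts are read
print(f"Llama 3.3 70B ceiling: {dense_70b:.0f} t/s")
print(f"Qwen3 30B A3B ceiling: {moe_a3b:.0f} t/s")
```

With these assumptions, the dense 70B model tops out at roughly 15 t/s, close to the 12.81 t/s measured above. The MoE ceiling is over 300 t/s, and the measured 43 t/s sits far below it. That gap suggests that, for the MoE model, costs other than weight reads (attention, expert routing, many small kernels) dominate.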

llama.cpp Q4_K_M Performance

Switching to llama.cpp with Q4_K_M GGUF files, the Llama 3.3 70B model delivered a prompt processing speed of 187.98 tokens per second and a generation speed of 10.43 tokens per second for a short context (46 prompt tokens). With an 8K context (8077 prompt tokens), prompt processing was 231.53 t/s, and generation speed was 7.59 t/s.

These speeds are certainly usable, especially considering the model size. Some community members have reported that koboldcpp, configured with its rowsplit and mmq options plus llama.cpp's built-in flash attention, can reach slightly higher speeds of around 12-14 tokens per second for a 70B Q5 model. That is in the same ballpark as my findings.

| Model | Context Length | Prompt Processing (tokens/sec) | Token Generation (tokens/sec) |
|---|---|---|---|
| Llama 3.3 70B | Short (46 tokens) | 187.98 | 10.43 |
| Llama 3.3 70B | 8K | 231.53 | 7.59 |
| Qwen3 30B A3B | 8K | 950.42 | 34.24 |
| Qwen3 30B A3B | 16K | 673.27 | 21.29 |
| Qwen3 30B A3B | 32K | 344.77 | 11.22 |

The Qwen3 30B A3B model on llama.cpp with Q4_K_M quantization also performed well. At an 8K context (8080 prompt tokens), it achieved a prompt processing speed of 950.42 t/s and a generation speed of 34.24 t/s. Increasing to a 16K context (15706 prompt tokens), prompt processing was 673.27 t/s and generation was 21.29 t/s. At a substantial 32K context (31772 prompt tokens), the card managed 344.77 t/s for prompt processing and 11.22 t/s for generation.

The RTX 8000 vs. Dual RTX 3090s

When you compare the Quadro RTX 8000 against a dual RTX 3090 setup, the financial and performance trade-offs become stark. The RTX 8000 provides its 48GB of VRAM for around $2250, while two used RTX 3090s match that capacity for approximately $1800, an immediate cost advantage for the latter. The raw performance gap compounds this. The RTX 3090 offers 936 GB/s of memory bandwidth against the RTX 8000's 672 GB/s, and that translates fairly directly into faster token generation, which is largely limited by how quickly weights can be read from VRAM.
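The cost and bandwidth gap can also be expressed in per-unit terms. The sketch below uses only the approximate used-market prices and bandwidth figures quoted above. It also makes one assumption: with a layer split across two cards, each token passes through them one after the other, so per-card bandwidth sets the decode pace rather than the combined figure.

```python
# Approximate used-market prices and total VRAM quoted in this article.
options = {
    "Quadro RTX 8000 (1 card)": (2250, 48),
    "RTX 3090 (2 cards)": (1800, 2 * 24),
}

# Dollars per gigabyte of VRAM for each way of reaching 48GB.
cost_per_gb = {name: price / vram for name, (price, vram) in options.items()}
for name, dollars in cost_per_gb.items():
    print(f"{name}: ${dollars:.2f} per GB of VRAM")

# Per-card bandwidth ratio (assumed to set the decode pace under a layer split).
bandwidth_ratio = 936 / 672
print(f"RTX 3090 bandwidth advantage: {bandwidth_ratio:.2f}x per card")
```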

My own tests on the Qwen3 30B A3B model (Q4_K_M, 32K context) showed a single RTX 3090 delivering around 35 t/s for generation and 750 t/s for prompt processing, far outpacing the RTX 8000's 11 t/s and 344 t/s respectively. The trend continues with larger models like Llama 3.3 70B. A dual RTX 3090 setup typically offers around 16 t/s there, while my RTX 8000 tests yielded 7.59 to 10.43 t/s, roughly half to two-thirds of that speed for the same VRAM. RTX 3090s also benefit from broader software support, including more mature FlashAttention 2 implementations and compatibility with newer quantization techniques that the RTX 8000's Turing architecture often lacks.
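The relative speeds behind this comparison are easy to recompute. The sketch below uses only figures quoted in this article. The 16 t/s dual-3090 figure has no stated context length, so the 70B ratios are approximate. The dollars-per-token/second line uses the RTX 8000's best-case 10.43 t/s.

```python
# Generation speeds quoted in this article (tokens/second).
rtx_8000_70b = (7.59, 10.43)  # llama.cpp Q4_K_M, 8K and short context
dual_3090_70b = 16.0          # typical figure cited for two RTX 3090s
rtx_8000_qwen_32k = 11.22     # Qwen3 30B A3B, Q4_K_M, 32K context
rtx_3090_qwen_32k = 35.0      # single RTX 3090, same test (approximate)

ratios_70b = [tps / dual_3090_70b for tps in rtx_8000_70b]
qwen_ratio = rtx_8000_qwen_32k / rtx_3090_qwen_32k

for tps, ratio in zip(rtx_8000_70b, ratios_70b):
    print(f"70B: RTX 8000 at {tps} t/s is {ratio:.0%} of the dual-3090 speed")
print(f"Qwen3 30B A3B at 32K: RTX 8000 reaches {qwen_ratio:.0%} of a single RTX 3090")

# Dollars per token/second of 70B generation speed, using the prices quoted above.
print(f"RTX 8000: ${2250 / max(rtx_8000_70b):.0f} per t/s; "
      f"dual RTX 3090: ${1800 / dual_3090_70b:.0f} per t/s")
```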

Despite the performance deficit, the RTX 8000 does present compelling practical advantages in specific scenarios. Its ability to pack 48GB of VRAM into a single PCIe slot, coupled with an efficient dual-slot blower cooler, is a significant boon for system builders constrained by chassis dimensions, limited PCIe availability, or tighter power supply budgets. Its 260W TDP is notably more modest than the potential 700W draw from two high-power RTX 3090s.
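The power argument can also be framed as energy per generated token. The sketch below is a worst case. It assumes each setup draws its full rated TDP for the whole run (260 W, and 2 x 350 W for the 3090s), which usually overstates real inference draw. It uses the 70B generation speeds quoted above. `joules_per_token` is a hypothetical helper.

```python
def joules_per_token(board_power_w: float, tokens_per_second: float) -> float:
    """Energy per generated token if the card(s) drew `board_power_w` the whole time."""
    # Watts are joules per second; dividing by tokens per second gives joules per token.
    return board_power_w / tokens_per_second


# Assumption: rated TDPs stand in for measured draw.
rtx_8000_j = joules_per_token(260, 10.43)    # one RTX 8000, Llama 3.3 70B
dual_3090_j = joules_per_token(2 * 350, 16.0)  # two RTX 3090s, Llama 3.3 70B

print(f"RTX 8000: {rtx_8000_j:.1f} J/token")
print(f"Dual RTX 3090: {dual_3090_j:.1f} J/token")
```

Under that assumption, the RTX 8000 comes out at about 25 J per token against roughly 44 J for the dual 3090s. Measured wall power would be needed to confirm how the gap looks in practice.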

This streamlined integration of a single high-VRAM card can simplify a build considerably, sidestepping the more complex considerations of cooling, power delivery, and motherboard compatibility inherent in a dual RTX 3090 configuration. Thus, the choice hinges on whether these physical integration benefits outweigh the clear performance-per-dollar lead of the Ampere alternative.

Final Thoughts

Ultimately, while the Quadro RTX 8000 demonstrates some unique strengths, for my own build considerations and at its current market price of around $2250, purchasing it instead of a dual RTX 3090 setup doesn’t quite add up from a pure price-to-performance standpoint. The Ampere-based 3090s generally offer better raw speed and broader, more current software support for a potentially lower total cost to achieve 48GB of VRAM.

Nevertheless, the RTX 8000 does carve out a specific niche. If your top priority is maximizing VRAM within a single PCIe slot, simplifying the physical build of a high-VRAM system, or keeping overall power consumption down in a 48GB+ configuration, it remains a noteworthy card. It suits the informed enthusiast who understands these trade-offs and values its VRAM density and multi-GPU friendliness in a compact form factor more than the highest tokens-per-second that alternative configurations might offer.

Allan Witt

Allan Witt is co-founder and editor in chief of Hardware-corner.net. Computers and the web have fascinated me since I was a child. In 2011 I started training as an IT specialist at a medium-sized company and started a blog at the same time. I really enjoy blogging about tech. After successfully completing my training, I worked as a system administrator at the same company for two years. As a part-time job, I started tinkering with pre-built PCs and building custom gaming rigs at a local hardware shop. The desire to build PCs full-time grew stronger, and now this is my full-time job.
