I Tested Dual RTX 5060 Ti 16GB vs RTX 3090 for Local LLMs – Here’s What Surprised Me

The landscape of local Large Language Model (LLM) inference is constantly evolving, and for us enthusiasts who build and tweak our own rigs, the hunt for the perfect balance of VRAM, performance, and price is a perpetual quest. With the (hypothetical, as of my testing timeframe) arrival of cards like the NVIDIA RTX 5060 Ti 16GB, new possibilities emerge.

I’ve been particularly intrigued by the prospect of a dual RTX 5060 Ti 16GB setup. How does it stack up against a stalwart of the used market, the mighty RTX 3090, especially when we’re pinching pennies but still demand serious VRAM? I decided to put them to the test, and here’s what I’ve uncovered.

Specs and Price Showdown (June 2025)

As we peer into June 2025, the market presents some interesting options. A used RTX 3090, with its generous 24GB of VRAM, can be found for around $850 to $900. On the other hand, securing two new RTX 5060 Ti 16GB cards would set us back approximately $950. Each RTX 5060 Ti offers 16GB of GDDR7 memory, 4608 CUDA cores, and 448 GB/s of memory bandwidth, all within a 180W TDP and over a PCIe 5.0 x8 interface. This positions a dual-card setup as a 32GB VRAM option for under a grand.

Let’s break down the key specifications side-by-side:

| Feature | Dual RTX 5060 Ti 16GB (Hypothetical) | Single RTX 3090 (Used) |
|---|---|---|
| GPU Configuration | 2x NVIDIA RTX 5060 Ti | 1x NVIDIA RTX 3090 |
| Total VRAM | 32GB (16GB per card) GDDR7 | 24GB GDDR6X |
| Memory Bandwidth | 448 GB/s per card | 936 GB/s |
| CUDA Cores (Total) | 9216 (4608 per card) | 10496 |
| TDP (Total for GPUs) | ~360W (180W per card) | ~350W |
| Interface | PCIe 5.0 x8 per card | PCIe 4.0 x16 |
| Est. Cost (June 2025) | ~$950 (New) | ~$850 to $900 (Used) |

The immediate takeaway is the VRAM advantage for the dual 5060 Ti setup – a full 32GB. However, the RTX 3090 counters with more than double the memory bandwidth on a single card. This sets the stage for an interesting performance trade-off.

Benchmarking Rig and Methodology

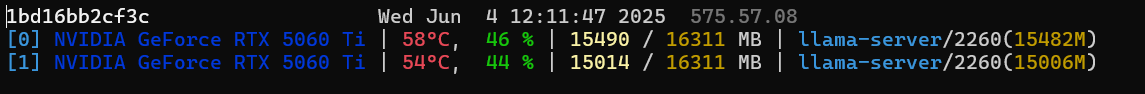

To get to the bottom of this, I ran a series of tests on a system running Ubuntu 22.04 LTS with NVIDIA driver version 575.57.08. My inference stack consisted of the llama.cpp server with OpenWebUI as the front-end. I focused on two Unsloth dynamic quant models, both in 4-bit GGUF quantization:

- Qwen3-30B-A3B-128K-UD-Q4_K_XL: A Mixture-of-Experts (MoE) model, generally more forgiving on VRAM bandwidth for its size.

- Qwen3-32B-UD-Q4_K_XL: A dense model, which typically stresses memory bandwidth more heavily for token generation.

My goal was to measure prompt processing speed (tokens/second) and, more crucially for interactive use, token generation speed (tokens/second) across various context lengths.

Dual RTX 5060 Ti 16GB

The primary allure of the dual RTX 5060 Ti setup is its 32GB VRAM pool. This allows us to not only load larger models but also to push context lengths further or even use higher precision quantization.

For instance, with 32GB, a 30B parameter Qwen model like Qwen3 30B A3B could potentially be run at 6-bit quantization (requiring roughly 25 GB for weights), leaving lots of room for context. This is a feat the 24GB RTX 3090 would struggle with, likely being limited to 5-bit quantization (around 21GB for weights) with less overhead for extensive context.
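The weight sizes quoted above follow from simple arithmetic: parameters times bits per weight, divided by eight. Here is a minimal sketch of that estimate. The parameter count (~30.5B) and the average bits-per-weight figures are my assumptions for typical llama.cpp K-quants, not numbers measured in these tests, and real GGUF files vary with the exact quant mix.

```python
def weight_size_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in GB (10^9 bytes)."""
    return n_params_billion * bits_per_weight / 8


# Assumptions (not from the benchmarks): ~30.5B total parameters and rough
# average bits per weight for a 6-bit and a 5-bit K-quant.
PARAMS_BILLION = 30.5
QUANTS = {"6-bit": 6.56, "5-bit": 5.5}

for label, bpw in QUANTS.items():
    size = weight_size_gb(PARAMS_BILLION, bpw)
    # Whatever is left after the weights must hold the KV cache and buffers.
    print(f"{label}: ~{size:.1f} GB weights, "
          f"{32 - size:+.1f} GB vs 32 GB, {24 - size:+.1f} GB vs 24 GB")
```

Under these assumptions, the 6-bit weights alone already exceed a 24GB card, while the 5-bit weights fit with only a few gigabytes to spare for context.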

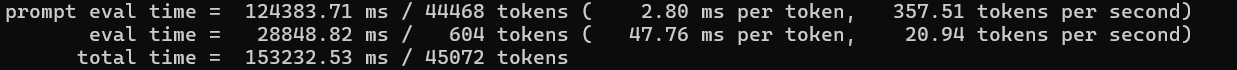

In my tests with 4-bit quantized models, the dual RTX 5060 Ti 16GB configuration demonstrated its capacity to handle long contexts. With the Qwen3 30B A3B model, I was able to reach a maximum context size of approximately 44,000 tokens and still get a usable answer from the model.

Here’s a snapshot of the performance I observed:

Dual RTX 5060 Ti 16GB Performance (llama.cpp, Ubuntu 22.04 LTS, OpenWebUI)

| Model | Context Size (Tokens) | Prompt Eval (s) | Prompt Eval Speed (t/s) | Token Gen Speed (t/s) |
|---|---|---|---|---|
| Qwen3 30B A3B | ~1,600 | 1.15 | 1422.67 | 80.83 |
| Qwen3 30B A3B | ~14,000 | 17.74 | 797.23 | 44.94 |
| Qwen3 30B A3B | ~32,000 | 70.19 | 459.63 | 26.04 |
| Qwen3 30B A3B | ~44,000 | 124.38 | 357.51 | 20.94 |
| Qwen3 32B | ~1,600 | 2.56 | 642.11 | 17.88 |
| Qwen3 32B | ~10,000 | 28.81 | 378.24 | 13.15 |
| Qwen3 32B | ~12,000 | 34.30 | 358.44 | 12.89 |
| Qwen3 32B | ~14,000 | 46.30 | 320.04 | 12.14 |
| Qwen3 32B | ~18,000 | 62.84 | 288.98 | 12.39 |

The token generation speeds are respectable, especially considering the price point and VRAM on offer. The ability to work with 44K context on a 30B MoE model is a significant win for tasks requiring deep understanding of large documents.
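The columns in the table are related by a simple identity: prompt eval speed is prompt tokens divided by prompt eval time. As a quick sanity check, the sketch below recovers the approximate prompt size for the Qwen3 30B A3B rows from the two reported columns. The function name is mine, and the context labels in the table are rounded.

```python
def prompt_tokens(eval_seconds: float, eval_speed_tps: float) -> float:
    """Prompt size implied by the reported eval time and eval speed."""
    return eval_seconds * eval_speed_tps


# (context label, prompt eval seconds, prompt eval speed in t/s),
# copied from the Qwen3 30B A3B rows of the dual RTX 5060 Ti table.
ROWS = [
    ("~1,600", 1.15, 1422.67),
    ("~14,000", 17.74, 797.23),
    ("~32,000", 70.19, 459.63),
    ("~44,000", 124.38, 357.51),
]

for label, seconds, speed in ROWS:
    print(f"{label}: {prompt_tokens(seconds, speed):,.0f} prompt tokens")
```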

RTX 3090 24GB

The RTX 3090, even as a used part, remains a great value card. Its key advantage is the 936 GB/s of memory bandwidth. This directly impacts how quickly the GPU can feed data to its cores during the token generation phase, which is critical for perceived LLM responsiveness. While its 24GB of VRAM is less than the dual 5060 Ti setup, it’s still ample for many large models, especially at 4-bit quantization.
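To make the bandwidth argument concrete, here is a rough back-of-the-envelope ceiling, not a measurement. For a dense model, generating each token requires reading essentially all of the weights once, so bandwidth divided by weight size gives an upper bound on tokens per second. The ~20 GB weight size for a 4-bit dense 32B model is my assumption. The sketch also ignores KV cache reads and compute, so real speeds land below the ceiling.

```python
def decode_ceiling_tps(bandwidth_gb_s: float, gb_read_per_token: float) -> float:
    """Upper bound on tokens/s if generation is purely bandwidth-bound."""
    return bandwidth_gb_s / gb_read_per_token


# Assumption: a 4-bit dense 32B model occupies roughly 20 GB of weights.
DENSE_WEIGHTS_GB = 20.0

# Per-card bandwidth from the spec table. With a layer-wise split the two
# 5060 Ti cards largely take turns, so their bandwidths do not simply add up.
CARDS = {"RTX 3090": 936.0, "RTX 5060 Ti (per card)": 448.0}

for name, bandwidth in CARDS.items():
    ceiling = decode_ceiling_tps(bandwidth, DENSE_WEIGHTS_GB)
    print(f"{name}: at most ~{ceiling:.1f} t/s on the dense model")
```

The same reasoning explains why an MoE model is more forgiving: only the active experts are read for each token, so the amount of data read per token is much smaller.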

In my testing, the RTX 3090 managed a maximum context of around 32,000 tokens with the Qwen3 30B A3B model, which is substantial, though less than the dual 5060 Ti.

Here’s how the RTX 3090 performed:

RTX 3090 Performance (llama.cpp, Ubuntu 22.04 LTS, OpenWebUI)

| Model | Context Size (Tokens) | Prompt Eval (s) | Prompt Eval Speed (t/s) | Token Gen Speed (t/s) |
|---|---|---|---|---|
| Qwen3 30B A3B | ~1,600 | 0.90 | 1818.64 | 104.52 |
| Qwen3 30B A3B | ~14,000 | 11.64 | 1214.24 | 58.64 |
| Qwen3 30B A3B | ~32,000 | 46.52 | 692.22 | 28.01 |
| Qwen3 32B | ~1,600 | 1.62 | 1012.93 | 30.75 |
| Qwen3 32B | ~10,000 | 16.88 | 645.58 | 24.52 |

As expected, the RTX 3090 flexes its bandwidth muscle, delivering faster token generation speeds, particularly with the dense Qwen3 32B model.

Head-to-Head: Analyzing the Performance

Comparing these two setups reveals a clear trade-off. The RTX 3090 is undeniably faster in raw token generation. With the dense Qwen3-32B-UD-Q4_K_XL model, the RTX 3090 was roughly 72% to 86% faster in token generation than the dual RTX 5060 Ti setup at the two context lengths tested on both systems (~1.6k and ~10k tokens).

For instance, at a ~1.6k token context, the 3090 churned out 30.75 tokens/second versus the 5060 Ti pair’s 17.88 tokens/second. This speed advantage is significant if your primary workload involves models that heavily tax memory bandwidth.

However, the story changes somewhat with the Mixture-of-Experts model, Qwen3 30B A3B. Here, the token generation speed difference was less pronounced. The RTX 3090 was roughly 30% faster at moderate context lengths (~1.6k and ~14k tokens). This is because an MoE model activates only a subset of its parameters for each token (about 3B of the 30B here, as the A3B suffix indicates), which reduces the sustained bandwidth pressure compared to dense models.

What truly surprised me was the performance at very large context windows with the MoE model. At a 32,000 token context with Qwen3 30B A3B, the dual RTX 5060 Ti 16GB setup was only about 7% slower in token generation (26.04 t/s) than the RTX 3090 (28.01 t/s).

This is remarkable, given the RTX 3090 has roughly double the per-card memory bandwidth. It suggests that at extreme context lengths, other factors might come into play, or perhaps the way llama.cpp manages memory across GPUs for such large contexts becomes highly efficient, somewhat leveling the playing field when VRAM capacity is abundant.
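The percentages in this section can be recomputed directly from the token generation columns of the two tables. The short sketch below does so; the helper names are mine. Note that "faster" and "slower" use different baselines, which is why the 32K row comes out at about 7% slower for the 5060 Ti pair but closer to 8% faster for the 3090.

```python
def percent_faster(fast_tps: float, slow_tps: float) -> float:
    """How much faster the first setup is, relative to the second."""
    return (fast_tps / slow_tps - 1) * 100


def percent_slower(slow_tps: float, fast_tps: float) -> float:
    """How much slower the first setup is, relative to the second."""
    return (1 - slow_tps / fast_tps) * 100


# (model, context): (RTX 3090 t/s, dual RTX 5060 Ti t/s), from the tables.
GEN_SPEED = {
    ("Qwen3 32B", "~1,600"): (30.75, 17.88),
    ("Qwen3 32B", "~10,000"): (24.52, 13.15),
    ("Qwen3 30B A3B", "~1,600"): (104.52, 80.83),
    ("Qwen3 30B A3B", "~14,000"): (58.64, 44.94),
    ("Qwen3 30B A3B", "~32,000"): (28.01, 26.04),
}

for (model, context), (rtx3090, dual5060) in GEN_SPEED.items():
    print(f"{model} @ {context}: 3090 is {percent_faster(rtx3090, dual5060):.1f}% faster, "
          f"5060 Ti pair is {percent_slower(dual5060, rtx3090):.1f}% slower")
```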

And, of course, the dual RTX 5060 Ti setup offers more VRAM (32GB vs 24GB), enabling it to handle a ~44K token context with the Qwen3 30B A3B model, whereas the RTX 3090 topped out around 32K in my tests. This extra capacity is a clear win if you need to process extremely long prompts or documents.

Practicalities of a Dual 5060 Ti System

Opting for a dual RTX 5060 Ti 16GB setup isn’t just about buying two cards; it requires some system planning. You’ll need a motherboard with at least two PCIe x8 or x16 slots, ideally with good spacing between them to allow for adequate airflow, especially if the cards use open-air coolers rather than blowers.

Power-wise, the combined TDP of around 360W for the GPUs, plus the rest of the system, means an 800W PSU would be a sensible choice to ensure stability and provide comfortable headroom. Case airflow is also paramount to prevent thermal throttling. Software-wise, llama.cpp handles multi-GPU fairly well, but as with any multi-GPU configuration, occasional driver quirks or specific setup steps might be necessary.
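For the software side, here is a minimal sketch of how a two-GPU `llama-server` launch command might be assembled, assuming the binary is on your PATH. The flags are standard llama.cpp server options, but the model path, context size, and even 1:1 split are illustrative placeholders, not the exact settings behind the benchmarks above.

```python
import shlex


def llama_server_command(model_path: str, ctx_size: int,
                         tensor_split=(1, 1), port: int = 8080) -> list[str]:
    """Build an argv list for llama-server spread across several GPUs."""
    return [
        "llama-server",
        "--model", model_path,
        "--ctx-size", str(ctx_size),
        "--n-gpu-layers", "99",        # offload all layers to the GPUs
        "--split-mode", "layer",       # distribute whole layers across cards
        "--tensor-split", ",".join(str(part) for part in tensor_split),
        "--host", "127.0.0.1",
        "--port", str(port),
    ]


# Hypothetical model path and context size, for illustration only.
cmd = llama_server_command("models/Qwen3-30B-A3B-128K-UD-Q4_K_XL.gguf", 32768)
print(shlex.join(cmd))
```

The printed line can be pasted into a shell, or the list can be passed to `subprocess.run`. An uneven split such as `(3, 2)` is worth trying if one card also drives a display and therefore has less free VRAM.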

Which GPU Setup Wins for Local LLMs?

So, which setup is the better choice for the local LLM enthusiast in June 2025? At roughly $950 for the dual RTX 5060 Ti 16GB pair versus $850 to $900 for a used RTX 3090, the price difference is not enormous.

If maximum token generation speed for models that fit within 24GB is your absolute priority, and your workloads frequently involve dense models, the RTX 3090 still holds an edge due to its superior memory bandwidth. It’s a simpler, single-card solution that delivers impressive performance.

However, if your work involves very large context windows, or if you aim to run models at higher precision quantization (like 6-bit for 30B models), the dual RTX 5060 Ti 16GB setup becomes incredibly compelling.

The 32GB of VRAM is a significant advantage, and its performance, especially with MoE models and at very large contexts, is more than respectable. It’s a viable option that provides a larger memory pool than a 3090, and the performance can be surprisingly close in certain scenarios, making that extra ~$50-100 investment for more VRAM quite attractive.

Ultimately, the decision largely boils down to how much context you anticipate using regularly. If you’re consistently pushing the boundaries of VRAM with large prompts, the dual RTX 5060 Ti 16GB configuration offers a pathway that the RTX 3090 simply can’t match in terms of sheer capacity.

Upgrade Paths and Future Gazing

For those considering an upgrade path, starting with a single RTX 5060 Ti 16GB offers the flexibility to add a second card later, effectively doubling VRAM. This staged approach can be easier on the wallet. If you already own an RTX 3090 and are VRAM-limited, adding a second 3090 (if your system and budget permit) is an option, or you might be looking towards even higher-end (and currently much pricier) cards like the RTX 5090 or future generations.

The used market for RTX 3090s is seeing a downward price trend, which might make them even more attractive if prices fall further, narrowing the value proposition against newer dual-card setups. On the other hand, the RTX 5060 Ti, being a (hypothetically) more recent “current-gen” offering in the 5000-series, might see its price remain relatively stable for a while, especially if demand for its 16GB VRAM variant is high among LLM users.

From my perspective, the dual RTX 5060 Ti 16GB configuration has proven itself to be a surprisingly potent and versatile option for local LLM inference. It’s a testament to how creative hardware combinations can unlock significant capabilities for the budget-conscious, technically savvy enthusiast. The VRAM is plentiful, the performance is solid, and the path it opens for handling ever-larger models and contexts is undeniably exciting.

Allan Witt

Allan Witt is co-founder and editor-in-chief of Hardware-corner.net. Computers and the web have fascinated me since I was a child. In 2011 I started training as an IT specialist in a medium-sized company and started a blog at the same time. I really enjoy blogging about tech. After successfully completing my training, I worked as a system administrator in the same company for two years. As a part-time job, I started tinkering with pre-built PCs and building custom gaming rigs at a local hardware shop. The desire to build PCs full-time grew stronger, and now this is my full-time job.
