LLM Hardware News

The latest LLM hardware news: GPU releases, memory bandwidth advances, and new platforms for running large language models locally.

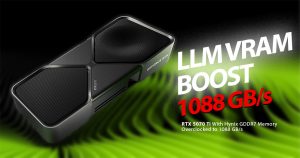

Local LLM Inference Just Got Faster: RTX 5070 Ti With Hynix GDDR7 VRAM Overclocked to 1088 GB/s Bandwidth

Inference, VRAM

New Chinese Mini-PC with AI MAX+ 395 (Strix Halo) and 128GB Memory Targets Local LLM Inference

Strix Halo

Smarter Local LLMs, Lower VRAM Costs – All Without Sacrificing Quality, Thanks to Google’s New QAT Optimization

VRAM

Arc GPUs Paired with Open-Source AI Playground Offer Flexible Local AI Setup

GPU

RTX 5060 Ti for Local LLMs: It’s Finally Here – But Is It Available, and Is the Price Still Right?

Dual RTX 5060 Ti: The Ultimate Budget Solution for 32GB VRAM LLM Inference at $858

GPU

55% More Bandwidth! RTX 5060 Ti Set to Demolish 4060 Ti for Local LLM Performance

GPU

AMD Targets Faster Local LLMs: Ryzen AI 300 Hybrid NPU+iGPU Approach Aims to Accelerate Prompt Processing

Inference, Strix Halo, Unified memory

Llama 4 Scout & Maverick Benchmarks on Mac: How Fast Is Apple’s M3 Ultra with These LLMs?

Benchmarks, Mac