FEVM FA-EX9 Mini PC Benchmarked: 128GB Strix Halo for Local LLM Inference

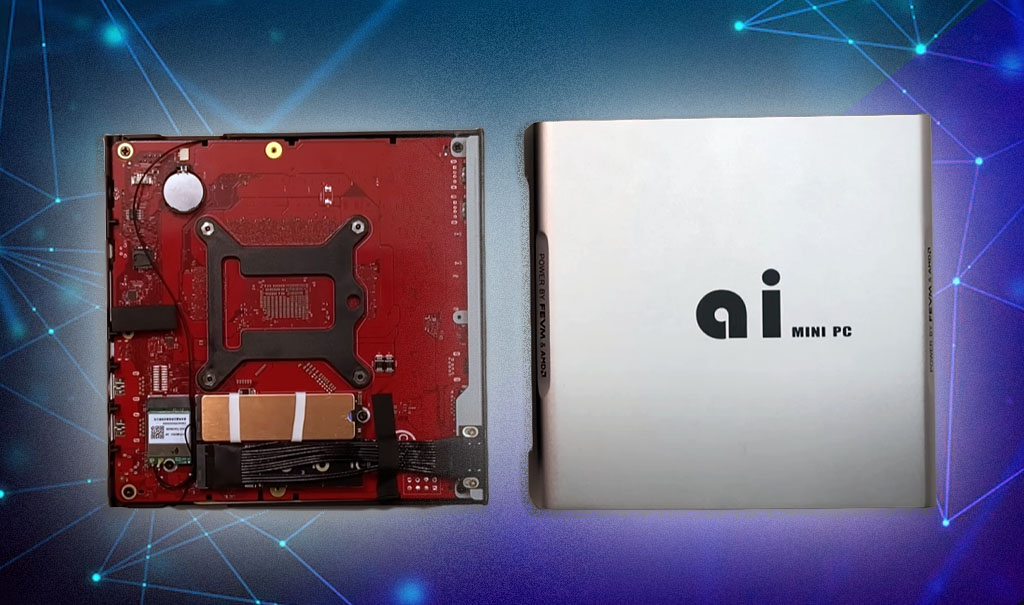

The arrival of AMD’s Ryzen AI MAX+ 395 “Strix Halo” APU has generated considerable interest among local LLM enthusiasts, promising a potent combination of CPU and integrated graphics performance with substantial memory capacity. One of the first systems to showcase this new silicon is the FEVM FA-EX9 Mini PC.

Following the recent announcement of its specifications, the first look at its LLM inference capabilities comes primarily from testing by the “jack stone” YouTube channel, and those results offer a glimpse of what users can expect.

Strix Halo’s Proposition for Local LLM Inference

The core of the FEVM FA-EX9 is the AMD Ryzen AI MAX+ 395, an APU featuring 16 Zen 5 CPU cores and an integrated Radeon 8060S GPU with 40 RDNA 3.5 Compute Units. For LLM workloads, the standout feature is its support for up to 128GB of LPDDR5X system memory.

The platform allows a significant portion of this unified memory to be allocated as VRAM: the reviewed FA-EX9 unit was tested with 64GB dedicated to video memory, and the BIOS allows up to 96GB in Windows. This large, configurable VRAM pool is critical for loading larger quantized models that would not fit in the memory of a typical consumer discrete GPU.
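As a rough way to see which models fit a given VRAM allocation, weight size can be approximated as parameter count times effective bits per weight, divided by 8. The sketch below assumes about 4.5 bits per weight for a Q4 quant and a hypothetical 4GB of headroom for the KV cache and runtime; neither figure comes from the review, and real model files vary with the quantization recipe. The 120B entry is a hypothetical size used only for illustration.

```python
# Approximate effective bits per weight (assumption, not from the review).
ASSUMED_BPW = {"Q4": 4.5, "Q8": 8.5, "BF16": 16.0}

def weight_gb(params_billion: float, quant: str) -> float:
    """Approximate weight footprint in GB: billions of params x bits / 8."""
    return params_billion * ASSUMED_BPW[quant] / 8

def fits(params_billion: float, quant: str, vram_gb: float,
         headroom_gb: float = 4.0) -> bool:
    """True if weights plus a hypothetical KV-cache/runtime headroom fit in vram_gb."""
    return weight_gb(params_billion, quant) + headroom_gb <= vram_gb

# 120B is a hypothetical size used only to show where a 64GB allocation runs out.
for name, params in [("8B", 8), ("32B", 32), ("70B", 70), ("120B (hypothetical)", 120)]:
    size = weight_gb(params, "Q4")
    print(f"{name} Q4: ~{size:.1f} GB | fits 64GB: {fits(params, 'Q4', 64)} "
          f"| fits 96GB: {fits(params, 'Q4', 96)}")
```

Under these assumptions the 8B, 32B and 70B estimates (about 4.5, 18 and 39GB) land close to the VRAM figures reported in the benchmark table, while a hypothetical 120B Q4 model would need the larger 96GB allocation.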

Memory bandwidth is a crucial factor for token generation speed. The reviewed system demonstrated a memory bandwidth of up to 211 GB/s (theoretical max 256 GB/s). While this is a substantial figure for an integrated solution and a notable step up from standard DDR5, it’s a metric where discrete GPUs still hold a significant advantage, impacting peak inference throughput, especially on very large models.
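The 256 GB/s theoretical figure follows from the memory interface width and transfer rate. The sketch below assumes a 256-bit bus running LPDDR5X-8000, which reproduces the quoted peak, and compares that peak with the reported 211 GB/s measurement.

```python
def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: int) -> float:
    """Theoretical peak in GB/s: bytes per transfer x million transfers/s / 1000."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfer_rate_mts / 1000

peak = peak_bandwidth_gbs(256, 8000)  # assumption: 256-bit LPDDR5X-8000 interface
measured = 211.0                       # GB/s, as reported for the reviewed unit
print(f"peak {peak:.0f} GB/s, measured {measured:.0f} GB/s "
      f"({measured / peak:.0%} of theoretical)")
```

This works out to the measured figure reaching roughly 82% of the theoretical peak.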

FEVM FA-EX9: LLM Inference Benchmarks

The preliminary benchmarks provide a practical look at the FA-EX9’s performance across a range of quantized models. The tests were reportedly run with 64GB of the system’s 128GB LPDDR5X-8000 memory allocated as dedicated video memory.

Here’s a summary of the reported LLM inference speeds:

| Model | Quantization | VRAM Used | Inference Speed (tokens/second) |
|---|---|---|---|
| Llama 3.1 8B | Q4 | 5 GB | 36 |
| Qwen3 14B (Dense) | Q4 | 9 GB | 20 |
| Qwen3 32B (Dense) | Q4 | 20 GB | 9 |
| Qwen3 30B MoE | Q4 | 20 GB | 52 |
| Qwen3 30B MoE | Q8 | 31 GB | 41 |
| Qwen3 30B MoE | BF16 | 58 GB | 8 |
| DeepSeek Llama 3 70B | Q4 | 37 GB | 5 |
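Because single-user token generation on dense models is largely limited by memory bandwidth, a back-of-the-envelope check is to multiply each dense model's VRAM footprint by its token rate. The sketch below assumes every generated token reads roughly the whole reported VRAM footprint once; this slightly overstates the bytes moved, since that footprint also includes context buffers. It also computes a bandwidth-only ceiling using the 211 GB/s measurement.

```python
# Dense-model rows from the benchmark table: (model, VRAM used in GB, tokens/s).
DENSE_RESULTS = [
    ("Llama 3.1 8B Q4", 5, 36),
    ("Qwen3 14B Q4", 9, 20),
    ("Qwen3 32B Q4", 20, 9),
    ("DeepSeek Llama 3 70B Q4", 37, 5),
]
MEASURED_BW_GBS = 211.0  # reported memory bandwidth of the reviewed unit

def implied_bandwidth_gbs(vram_gb: float, tokens_per_s: float) -> float:
    # Assumption: each decoded token streams about the whole footprint once.
    return vram_gb * tokens_per_s

def decode_ceiling_tok_s(vram_gb: float, bw_gbs: float = MEASURED_BW_GBS) -> float:
    # Upper bound on tokens/s if memory bandwidth were the only limit.
    return bw_gbs / vram_gb

for name, vram, tps in DENSE_RESULTS:
    print(f"{name}: ~{implied_bandwidth_gbs(vram, tps):.0f} GB/s implied, "
          f"ceiling ~{decode_ceiling_tok_s(vram):.1f} tok/s (measured {tps})")
```

Under this assumption every dense result implies roughly 180 to 185 GB/s of effective bandwidth, and the 70B model's ceiling is about 5.7 tokens/second, so its reported 5 tokens/second is already close to what the memory subsystem allows.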

The performance with the Llama 3.1 8B model, achieving 36 tokens/second, was noted in the original review to be in a similar performance bracket to what one might see with mobile discrete GPUs like an NVIDIA GeForce RTX 4060M or RTX 4070M for that specific model size. This provides a useful, albeit rough, point of reference for users familiar with laptop-class discrete GPU performance.

For larger models, the numbers reflect the trade-offs inherent in current APU technology. The dense Qwen3 32B model ran at 9 tokens/second using 20GB of VRAM. The Qwen3 30B Mixture-of-Experts (MoE) model, which activates only a small subset of its parameters for each token even though all of them must be held in memory, reached an impressive 52 tokens/second with 4-bit quantization (Q4).

This highlights the efficiency gains possible with MoE architectures on memory-rich platforms. As expected, moving the MoE model to Q8, and especially to unquantized BF16 weights, significantly increased VRAM consumption (31GB and 58GB) and reduced throughput (41 and 8 tokens/second).
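The MoE advantage can be estimated with the same bandwidth reasoning, using active rather than total parameters. This sketch assumes the tested model is Qwen3-30B-A3B with roughly 3.3B active parameters per token, and about 4.5 effective bits per weight at Q4; both are assumptions, not figures from the review. It computes naive bandwidth-only ceilings for the MoE model and the dense 32B model.

```python
MEASURED_BW_GBS = 211.0  # reported memory bandwidth of the reviewed unit
ASSUMED_Q4_BPW = 4.5     # assumption: approximate effective bits/weight at Q4

def gb_read_per_token(active_params_billion: float, bpw: float = ASSUMED_Q4_BPW) -> float:
    # Only the active parameters' weights are read for each generated token.
    return active_params_billion * bpw / 8

def ceiling_tok_s(active_params_billion: float, bw_gbs: float = MEASURED_BW_GBS) -> float:
    # Bandwidth-only upper bound; ignores KV-cache reads and kernel overhead.
    return bw_gbs / gb_read_per_token(active_params_billion)

moe = ceiling_tok_s(3.3)    # assumption: ~3.3B active params (Qwen3-30B-A3B)
dense = ceiling_tok_s(32)   # Qwen3 32B dense: every parameter is active
print(f"MoE ceiling ~{moe:.0f} tok/s, dense 32B ceiling ~{dense:.1f} tok/s, "
      f"ratio ~{moe / dense:.1f}x (reported: 52 vs 9 tok/s)")
```

The reported gap of about 5.8x (52 vs 9 tokens/second) is smaller than this naive ~9.7x ratio, which is unsurprising for an estimate that ignores KV-cache reads and the likely lower efficiency of many small expert matrix multiplications.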

The DeepSeek distilled Llama 3 70B model managed 5 tokens/second while consuming 37GB of VRAM. While functional, this speed for a 70B-class model underscores the bandwidth limitations compared to high-end discrete GPU setups when aiming for highly interactive speeds.

However, the ability to hold such a model entirely in the iGPU's memory allocation, rather than offloading layers to system RAM over PCIe or splitting it across multiple GPUs as a single consumer discrete card would require, is a key advantage of the Strix Halo platform.

Performance Context and Upgrade Path

The FEVM FA-EX9, like other forthcoming Strix Halo-based mini-PCs such as those from GMKtec, Beelink, and Zotac, is carving out a niche for users who prioritize memory capacity in a compact, power-efficient form factor. The primary appeal is the ability to run 30B to 70B parameter models (quantized) locally without the expense or complexity of multi-GPU desktop rigs or power-hungry workstation cards.

While the raw token-per-second figures for the largest models might not rival dedicated NVIDIA solutions with their high-bandwidth HBM or GDDR6X memory, the Strix Halo platform offers a more integrated and potentially more affordable entry point for experimenting with these larger models. The FA-EX9’s inclusion of an OCuLink port is a particularly noteworthy feature for the enthusiast. This provides a direct PCIe connection for an external GPU enclosure, offering an upgrade path to augment the iGPU’s capabilities. Users could, for example, add a discrete GPU later to accelerate prompt processing (prefill) or to handle even larger models, while still benefiting from the APU’s resources and large system memory pool.
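To put the external-GPU path in perspective, the OCuLink link's bandwidth can be compared with the APU's memory bandwidth. The FA-EX9's OCuLink PCIe generation and lane count are not stated here, so the sketch below assumes PCIe 4.0 x4 (16 GT/s per lane, 128b/130b encoding) and ignores packet overhead, meaning real throughput will be somewhat lower.

```python
def pcie_link_gbs(gt_per_s: float, lanes: int, encoding: float = 128 / 130) -> float:
    """Raw one-direction PCIe bandwidth in GB/s, before packet/protocol overhead."""
    return gt_per_s * lanes * encoding / 8

link = pcie_link_gbs(16.0, 4)  # assumption: OCuLink wired as PCIe 4.0 x4
memory_bw = 211.0              # GB/s, reported for the APU's LPDDR5X
model_gb = 37                  # DeepSeek Llama 3 70B Q4 footprint from the table

print(f"link ~{link:.1f} GB/s vs memory ~{memory_bw:.0f} GB/s "
      f"(~{memory_bw / link:.0f}x slower)")
print(f"one pass over a {model_gb} GB model across the link: ~{model_gb / link:.1f} s")
```

A gap of roughly 27x suggests that, in a combined setup, each device should keep its own share of the weights resident, with only intermediate activations crossing the link, rather than streaming weights over OCuLink for every token.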

For users deeply invested in local LLM inference, the FEVM FA-EX9 and the broader Strix Halo ecosystem represent an interesting development. The ability to allocate up to 110GB of memory to the iGPU (the BIOS caps dedicated VRAM at 96GB under Windows) opens doors for models that were previously out of reach for compact systems. While outright speed for the very largest models will remain the domain of more powerful (and expensive) discrete hardware, the FA-EX9 offers a compelling blend of memory capacity, reasonable performance for small to medium-large models, and a compact footprint, making it a system worth watching as more detailed third-party testing emerges.
